Compute shaders with David Lynch

Yesterday, 16 January 2025, the world lost a pure genius and a great visionary. David Lynch taught me to love the beauty of the abstract and to look not for answers but for the right questions. His art shaped me in so many different ways, and I am pretty sure this project is also partly inspired by his love of the absurd. Today’s post is a tribute to David Lynch, who is always on my mind.

From compute shaders to frag shaders

Today, while working on histogram 2.0, we are finally passing data from compute shaders to rendering shaders. This is what I was waiting for, as it’s closer to a real-life example!

We calculate the histogram data in one compute shader and store it in a storage buffer (chunksBuffer).

We also added one more compute shader to calculate the height of each histogram bar; I am not going to include it here because it would be too much code. The important part is that we also get the scale from it. Then we pass everything to the fragment shader:

const bindGroup = device.createBindGroup({
  layout: drawHistogramPipeline.getBindGroupLayout(0),
  entries: [
    { binding: 0, resource: { buffer: chunksBuffer, size: chunkSize * 4 * 4 } },
    { binding: 1, resource: { buffer: uniformBuffer } },
    { binding: 2, resource: { buffer: scaleBuffer } },
  ],
});

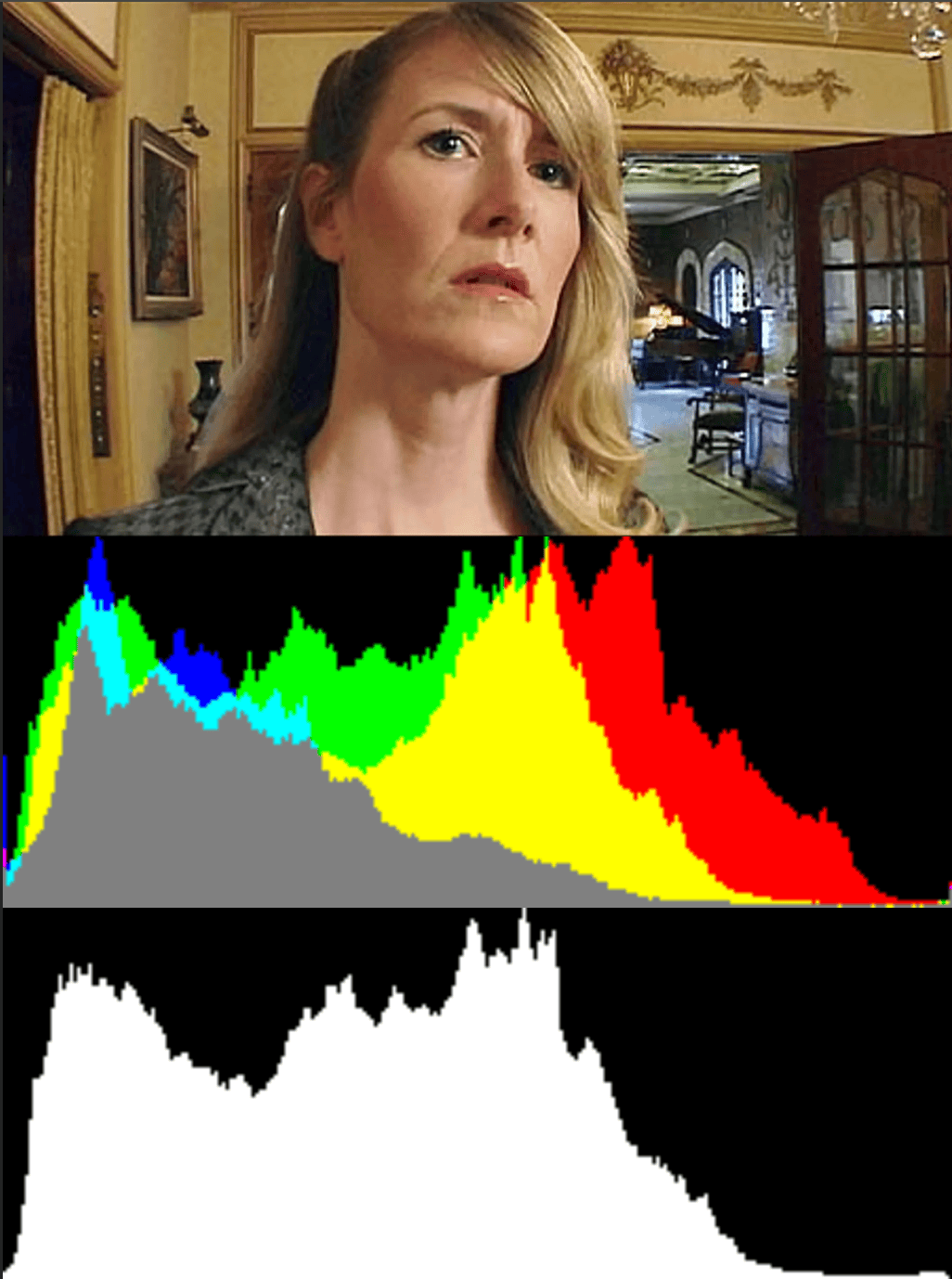

The outcome is the same histograms, but drawn on the GPU instead of in JavaScript:

Laura Dern in Inland Empire, 2006

To sum up: we have 3 compute pipelines here:

histogramChunkPipeline, where we calculate the histogram chunk by chunk;

chunkSumPipeline, where we gather all the histogram chunks from the workgroups into one chunk;

scalePipeline, where we compute the height of each histogram bar;

and 1 render pipeline

drawHistogramPipeline, where we read histogram data chunks and scale data and based on that render the histogram.
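To make the division of work between the three compute passes easier to follow, here is a rough CPU sketch in plain JavaScript. It is not the GPU code: the function names, the tiny bin count, and the choice of scaling the tallest bar to 1 are all assumptions made for illustration.

```javascript
// CPU sketch of what the three compute passes compute (illustrative only).
const NUM_BINS = 4; // assumption: tiny bin count to keep the example readable

// Pass 1 (like histogramChunkPipeline): one histogram per chunk.
// `luminances` holds the luminance of each pixel in the chunk, in [0, 1].
function histogramForChunk(luminances, numBins = NUM_BINS) {
  const bins = new Array(numBins).fill(0);
  for (const v of luminances) {
    const bin = Math.min(numBins - 1, Math.floor(v * numBins));
    bins[bin] += 1;
  }
  return bins;
}

// Pass 2 (like chunkSumPipeline): add all per-chunk histograms together.
function sumChunks(chunkHistograms, numBins = NUM_BINS) {
  const total = new Array(numBins).fill(0);
  for (const bins of chunkHistograms) {
    bins.forEach((count, i) => { total[i] += count; });
  }
  return total;
}

// Pass 3 (like scalePipeline): a scale factor for the bar heights.
// Assumption: the tallest bar is scaled to exactly 1.
function computeScale(bins) {
  const max = Math.max(...bins);
  return max > 0 ? 1 / max : 0;
}

// The render step would then multiply every bin by the scale.
const chunks = [[0.1, 0.1, 0.9], [0.6, 0.99]];
const total = sumChunks(chunks.map((c) => histogramForChunk(c)));
const heights = total.map((count) => count * computeScale(total));
```

On the GPU, pass 1 runs once per workgroup in parallel, which is why the separate summing pass is needed afterwards.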

Now can we do that on the same canvas on each frame?

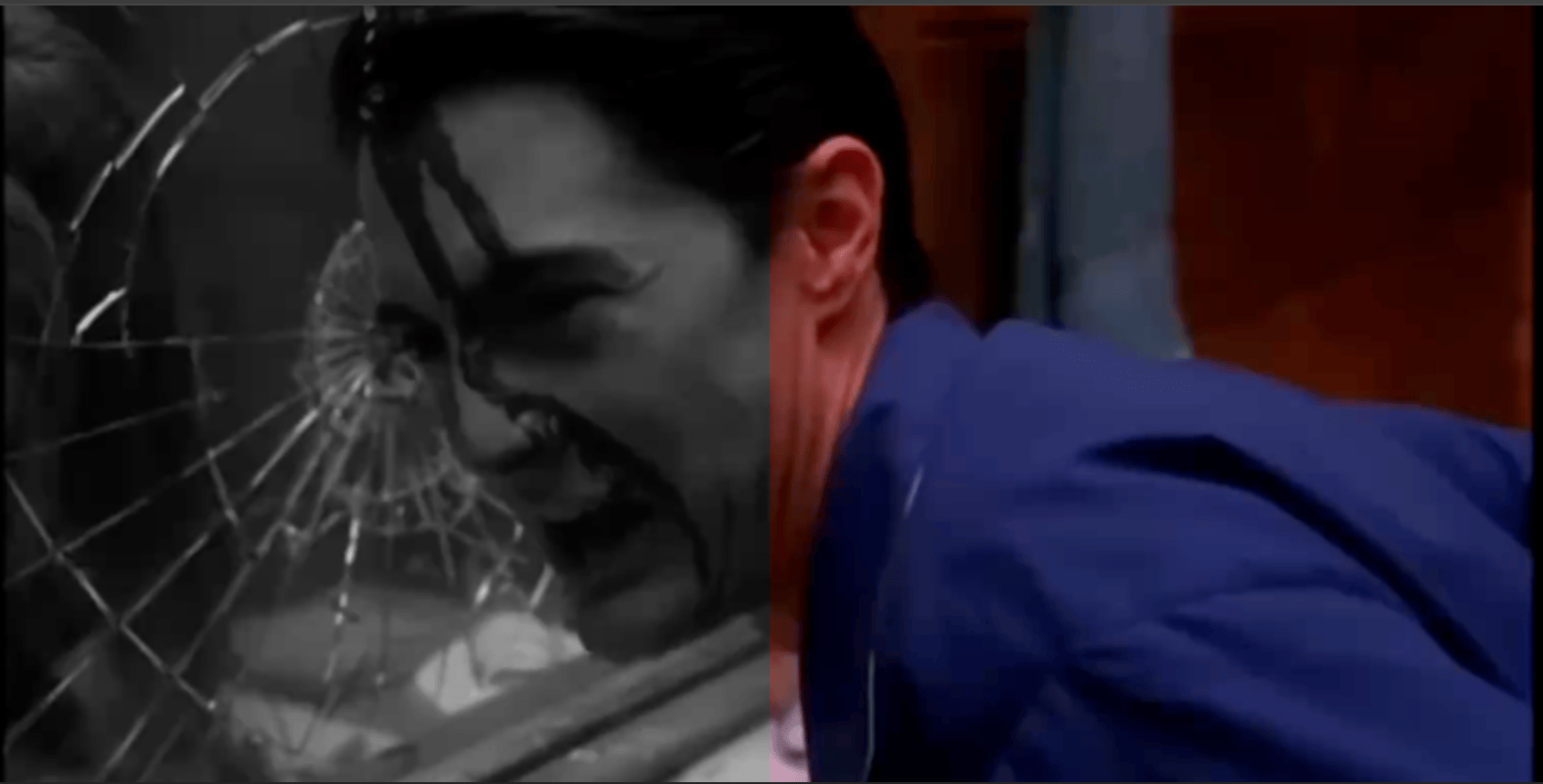

Dale Cooper becomes possessed by Bob. Ending of Twin Peaks, Season 2, 1991

Here we call our render function on each frame using the well-known requestAnimationFrame(render).

Bind groups are now also created on each frame, inside the render function.

An important detail is that we also created a separate render pipeline to render the video:

const videoPipeline = device.createRenderPipeline({
  label: 'hardcoded video textured quad pipeline',
  layout: 'auto',
  vertex: {
    module: videoModule,
  },
  fragment: {
    module: videoModule,
    targets: [{ format: presentationFormat }],
  },
});

const videoSampler = device.createSampler({
  magFilter: 'linear',
  minFilter: 'linear',
});

And this is the end of the WebGPU Fundamentals compute shader articles! Now let’s try to tweak the given example ourselves.

First, I got rid of the histogram and tried to make half of the video black and white:

Yes, I know, we don’t need a compute shader for that and it can be done in a frag shader, but we’re getting there.

Next, I changed the chunk sizes and made only the reddish pixels black and white (which in this case worked as blue segmentation). This is my new compute shader:

const k = {
  chunkWidth: 16,
  chunkHeight: 16,
};
const chunkSize = k.chunkWidth * k.chunkHeight;
const sharedConstants = Object.entries(k).map(([k, v]) => `const ${k} = ${v};`).join('\n');

const bwChunkModule = device.createShaderModule({
  label: 'bw shader',
  code: `
    ${sharedConstants}

    struct Chunk {
      pixels: array<vec4f, ${chunkSize}>
    };

    @group(0) @binding(0) var ourTexture: texture_external;
    @group(0) @binding(1) var outputTexture: texture_storage_2d<rgba8unorm, write>;
    @group(0) @binding(2) var<storage, read_write> chunks: array<Chunk>;

    const kSRGBLuminanceFactors = vec3f(0.2126, 0.7152, 0.0722);

    fn srgbLuminance(color: vec3f) -> f32 {
      return saturate(dot(color, kSRGBLuminanceFactors));
    }

    @compute @workgroup_size(chunkWidth, chunkHeight, 1)
    fn cs(
      @builtin(global_invocation_id) global_id: vec3u,
      @builtin(workgroup_id) workgroup_id: vec3u,
      @builtin(local_invocation_id) local_id: vec3u,
    ) {
      let size = textureDimensions(ourTexture);
      let position = global_id.xy;

      if (all(position < size)) {
        var color = textureLoad(ourTexture, position);

        // Check if the pixel is in the left half of the video
        // if (position.x < size.x / 2u) {
        // Check if the pixel is red-dominant
        if (color.r > color.g && color.r > color.b) {
          let luminance = srgbLuminance(color.rgb);
          color = vec4f(luminance, luminance, luminance, color.a);
        }
        //}

        // Store in chunk
        // Note: size.x / chunkWidth is an integer division, so this indexing
        // assumes the video width is a multiple of chunkWidth.
        let chunk_idx = workgroup_id.y * (size.x / chunkWidth) + workgroup_id.x;
        let pixel_idx = local_id.y * chunkWidth + local_id.x;
        chunks[chunk_idx].pixels[pixel_idx] = color;

        // Write to output texture
        textureStore(outputTexture, position, color);
      }
    }
  `,
});
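As a sanity check for the shader above, the same per-pixel logic can be mirrored on the CPU. This is only a sketch: processPixel and chunkLocation are hypothetical helper names, and rounding the number of chunks per row up (instead of the shader's plain integer division) is an assumption that gives a partial chunk at the right edge its own slot.

```javascript
const chunkWidth = 16;
const chunkHeight = 16;

// Same weights as kSRGBLuminanceFactors in the shader.
const LUMA = [0.2126, 0.7152, 0.0722];

// Mirrors the colour logic: red-dominant pixels become grey, others are untouched.
// A pixel is [r, g, b, a] with every channel in [0, 1].
function processPixel([r, g, b, a]) {
  if (r > g && r > b) {
    const dot = r * LUMA[0] + g * LUMA[1] + b * LUMA[2];
    const l = Math.min(1, Math.max(0, dot)); // same as saturate()
    return [l, l, l, a];
  }
  return [r, g, b, a];
}

// Where a pixel at (x, y) lands in the chunks buffer.
// Assumption: chunks per row is rounded up, unlike size.x / chunkWidth in the shader.
function chunkLocation(x, y, textureWidth) {
  const chunksAcross = Math.ceil(textureWidth / chunkWidth);
  const chunkIdx = Math.floor(y / chunkHeight) * chunksAcross + Math.floor(x / chunkWidth);
  const pixelIdx = (y % chunkHeight) * chunkWidth + (x % chunkWidth);
  return { chunkIdx, pixelIdx };
}
```

For a width that is a multiple of 16 both versions give the same indices; they only differ when the last chunk in a row is partial.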

and this is the outcome:

Okay, but what’s the point of compute shaders if we don’t store anything between the frames? Since, on Wouter’s advice, I will be looking into post-processing, this is a nice moment to create a little post-processing effect myself.

I think our Twin Peaks finale would benefit from a little motion blur. This is purely to get a better understanding of how WGSL compute shaders work, so I am not even googling how motion blur is actually done. My guess is to store previous frames and blend them with the current one.
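The guess can be written down for a single pixel in a few lines of JavaScript. This is a sketch of the idea only, not of real motion blur; blendFrames is a hypothetical helper, and the weights are arbitrary example values.

```javascript
// Blend the current colour of one pixel with the colours stored for it
// in previous frames. Colours are [r, g, b, a]; weights are plain numbers.
function blendFrames(current, previous, currentWeight, previousWeights) {
  const out = current.map((channel) => channel * currentWeight);
  previous.forEach((frame, i) => {
    frame.forEach((channel, c) => {
      out[c] += channel * previousWeights[i];
    });
  });
  return out;
}

// Example: a red pixel that was green one stored frame ago and blue two ago.
const blended = blendFrames(
  [1, 0, 0, 1],
  [[0, 1, 0, 1], [0, 0, 1, 1]],
  0.5,
  [0.25, 0.25],
);
```

If the weights add up to 1, the overall brightness stays the same. If the stored frames are almost identical to the current one, the blend is almost identical too.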

I started by storing 4 frames and did not see any visible effect.

Maybe we need to store more?

struct Chunk {
  frame0: array<vec4f, ${chunkSize}>, // Most recent previous frame
  frame1: array<vec4f, ${chunkSize}>,
  frame2: array<vec4f, ${chunkSize}>,
  frame3: array<vec4f, ${chunkSize}>,
  frame4: array<vec4f, ${chunkSize}>,
  frame5: array<vec4f, ${chunkSize}>,
  frame6: array<vec4f, ${chunkSize}>,
  frame7: array<vec4f, ${chunkSize}>,
  frame8: array<vec4f, ${chunkSize}>,
  frame9: array<vec4f, ${chunkSize}> // Oldest frame
};

At this point I got an error that my buffer is a little bit too big: Buffer size (279183360) exceeds the max buffer size limit (268435456).

So you can probably store around 9 frames of this video in one buffer, which is quite a lot!
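The estimate can be checked with a bit of arithmetic on the numbers from the error message. This is a sketch that assumes the buffer holds nothing but the 10 frames, each pixel stored as one vec4f (16 bytes). The second limit, maxStorageBufferBindingSize, does not appear in the error above; its default of 128 MiB is taken from the WebGPU spec and matters once the buffer is bound as a storage buffer.

```javascript
const REQUESTED_BYTES = 279183360;                   // from the error message, 10 frames
const MAX_BUFFER_SIZE = 268435456;                   // from the error message, 256 MiB
const MAX_STORAGE_BUFFER_BINDING_SIZE = 134217728;   // WebGPU default, 128 MiB
const BYTES_PER_PIXEL = 16;                          // one vec4f = 4 x f32

// Assumption: all 10 frames take the same amount of space.
const bytesPerFrame = REQUESTED_BYTES / 10;
const pixelsPerFrame = bytesPerFrame / BYTES_PER_PIXEL;

function framesThatFit(limitBytes, frameBytes) {
  return Math.floor(limitBytes / frameBytes);
}

const fitInBuffer = framesThatFit(MAX_BUFFER_SIZE, bytesPerFrame);
const fitInBinding = framesThatFit(MAX_STORAGE_BUFFER_BINDING_SIZE, bytesPerFrame);

console.log({ bytesPerFrame, pixelsPerFrame, fitInBuffer, fitInBinding });
// fitInBuffer is 9, fitInBinding is 4
```

So 9 frames fit under the buffer size limit, but with default limits only 4 of them could be bound as a single storage buffer binding. Both limits can be raised through requiredLimits when requesting the device, if the adapter supports larger values.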

Then I realized that the problem is not how many frames we store, but how different they are from each other. So I keep a frame counter and save only every n-th frame:

const motionBlurModule = device.createShaderModule({
  label: 'motion blur shader',
  code: `
    ${sharedConstants}

    struct Settings {
      frameInterval: u32,
      currentFrameWeight: f32,
      frame0Weight: f32,
      frame1Weight: f32,
      frame2Weight: f32,
      frame3Weight: f32,
    };

    struct Chunk {
      frame0: array<vec4f, ${chunkSize}>, // Most recent saved frame
      frame1: array<vec4f, ${chunkSize}>,
      frame2: array<vec4f, ${chunkSize}>,
      frame3: array<vec4f, ${chunkSize}> // Oldest saved frame
    };

    @group(0) @binding(0) var ourTexture: texture_external;
    @group(0) @binding(1) var outputTexture: texture_storage_2d<rgba8unorm, write>;
    @group(0) @binding(2) var<storage, read_write> chunks: array<Chunk>;
    @group(0) @binding(3) var<storage, read_write> frameCounter: array<u32, 1>;
    @group(0) @binding(4) var<uniform> settings: Settings;

    @compute @workgroup_size(chunkWidth, chunkHeight, 1)
    fn cs(
      @builtin(global_invocation_id) global_id: vec3u,
      @builtin(workgroup_id) workgroup_id: vec3u,
      @builtin(local_invocation_id) local_id: vec3u,
    ) {
      let size = textureDimensions(ourTexture);
      let position = global_id.xy;

      if (all(position < size)) {
        let chunk_idx = workgroup_id.y * (size.x / chunkWidth) + workgroup_id.x;
        let pixel_idx = local_id.y * chunkWidth + local_id.x;
        let currentColor = textureLoad(ourTexture, position);

        // Update frame counter with single thread
        // Note: the other invocations read frameCounter in this same dispatch
        // without synchronization, so they may see the old or the new value.
        if (global_id.x == 0u && global_id.y == 0u) {
          frameCounter[0] = frameCounter[0] + 1u;
        }

        // Store frames every N frames for all pixels
        if (frameCounter[0] % settings.frameInterval == 0u) {
          // Shift the history by one slot and save the current frame as the newest
          let prev0 = chunks[chunk_idx].frame0[pixel_idx];
          let prev1 = chunks[chunk_idx].frame1[pixel_idx];
          let prev2 = chunks[chunk_idx].frame2[pixel_idx];

          chunks[chunk_idx].frame3[pixel_idx] = prev2;
          chunks[chunk_idx].frame2[pixel_idx] = prev1;
          chunks[chunk_idx].frame1[pixel_idx] = prev0;
          chunks[chunk_idx].frame0[pixel_idx] = currentColor;
        }

        // Blend the current frame with the four saved frames
        let prev0 = chunks[chunk_idx].frame0[pixel_idx];
        let prev1 = chunks[chunk_idx].frame1[pixel_idx];
        let prev2 = chunks[chunk_idx].frame2[pixel_idx];
        let prev3 = chunks[chunk_idx].frame3[pixel_idx];

        let blendedColor = currentColor * settings.currentFrameWeight +
          prev0 * settings.frame0Weight +
          prev1 * settings.frame1Weight +
          prev2 * settings.frame2Weight +
          prev3 * settings.frame3Weight;

        textureStore(outputTexture, position, blendedColor);
      }
    }
  `,
});
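On the JavaScript side, the Settings uniform has to be filled with bytes in the same order as the WGSL struct: one u32 followed by five f32 values, 24 bytes in total. Here is a minimal sketch of that packing; packSettings and the shape of the gui object are hypothetical and not taken from the project.

```javascript
// Settings in WGSL: frameInterval (u32), then 5 weights (f32), 4 bytes each.
const SETTINGS_BYTE_SIZE = 6 * 4;

// `gui` is a hypothetical plain object holding the values from the GUI:
// { frameInterval, currentFrameWeight, frameWeights: [w0, w1, w2, w3] }
function packSettings(gui) {
  const data = new ArrayBuffer(SETTINGS_BYTE_SIZE);
  // Two typed-array views over the same bytes, one per scalar type.
  const asU32 = new Uint32Array(data);
  const asF32 = new Float32Array(data);
  asU32[0] = gui.frameInterval;      // byte offset 0
  asF32[1] = gui.currentFrameWeight; // byte offset 4
  asF32.set(gui.frameWeights, 2);    // byte offsets 8, 12, 16, 20
  return data;
}

const settingsData = packSettings({
  frameInterval: 30,
  currentFrameWeight: 0.5,
  frameWeights: [0.25, 0.125, 0.0625, 0.0625],
});
```

The resulting ArrayBuffer could then be uploaded with device.queue.writeBuffer into the buffer bound at binding 4, whenever the GUI values change.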

I also added a GUI to change the step between stored frames. At the maximum setting of around 120 frames we get this beautiful, trippy not-motion-blur:

I am quite happy with the result! Next I will try to use WGSL in more complex setups and finally start setting up the scene for the experience.