Textures in WebGPU

Vertex and Storage buffers

Before moving on to textures, I went through the lessons on storage and vertex buffers. Working with buffer data is a new concept for me, but it’s one of the essentials of working with WGSL shaders if you want to go further than triangles.

I already used uniform buffers in the previous lesson; now we’re looking into storage and vertex buffers.

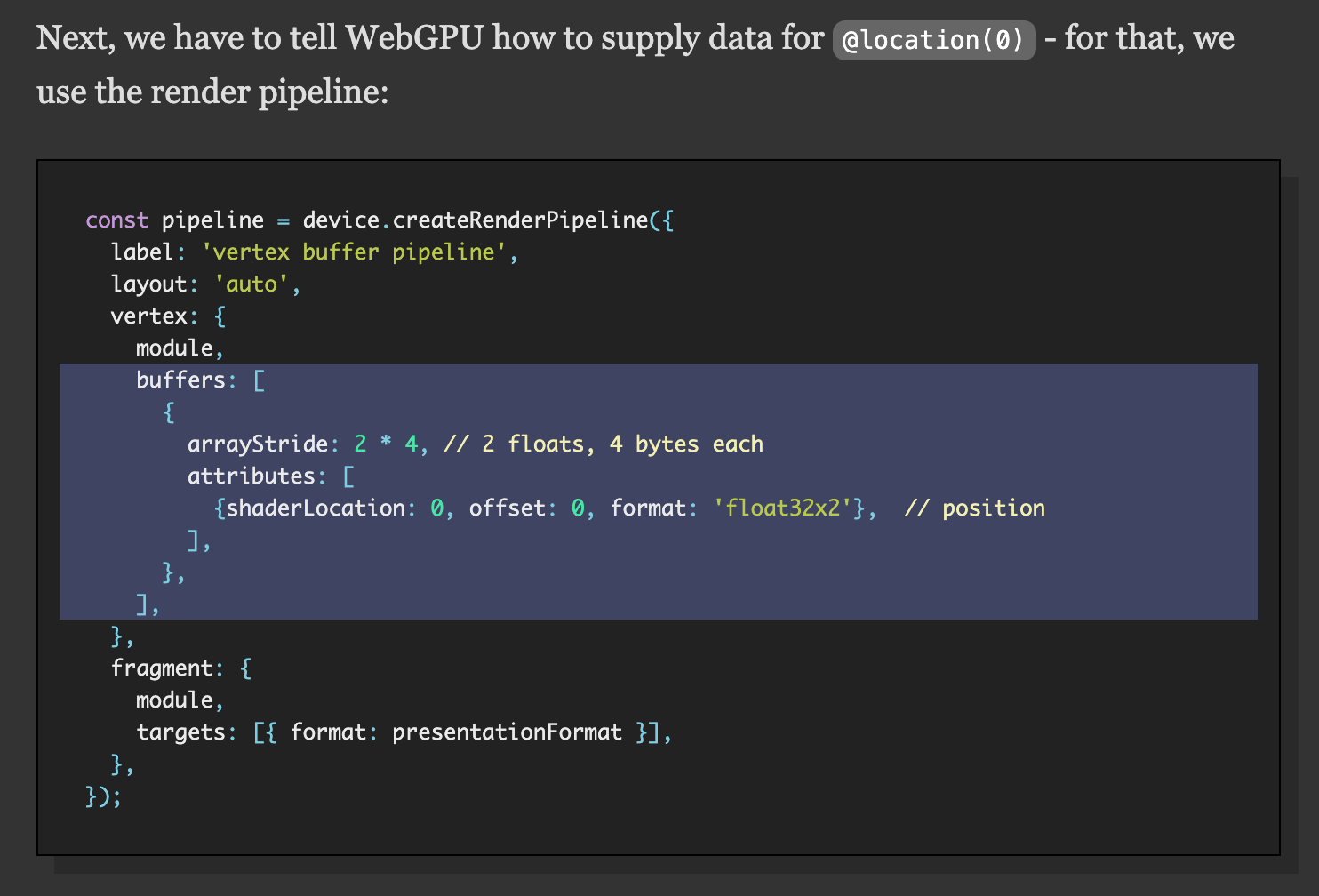

One of the main differences with a vertex buffer is how shaders access it. We need to tell WebGPU what the data is and how it’s organized:

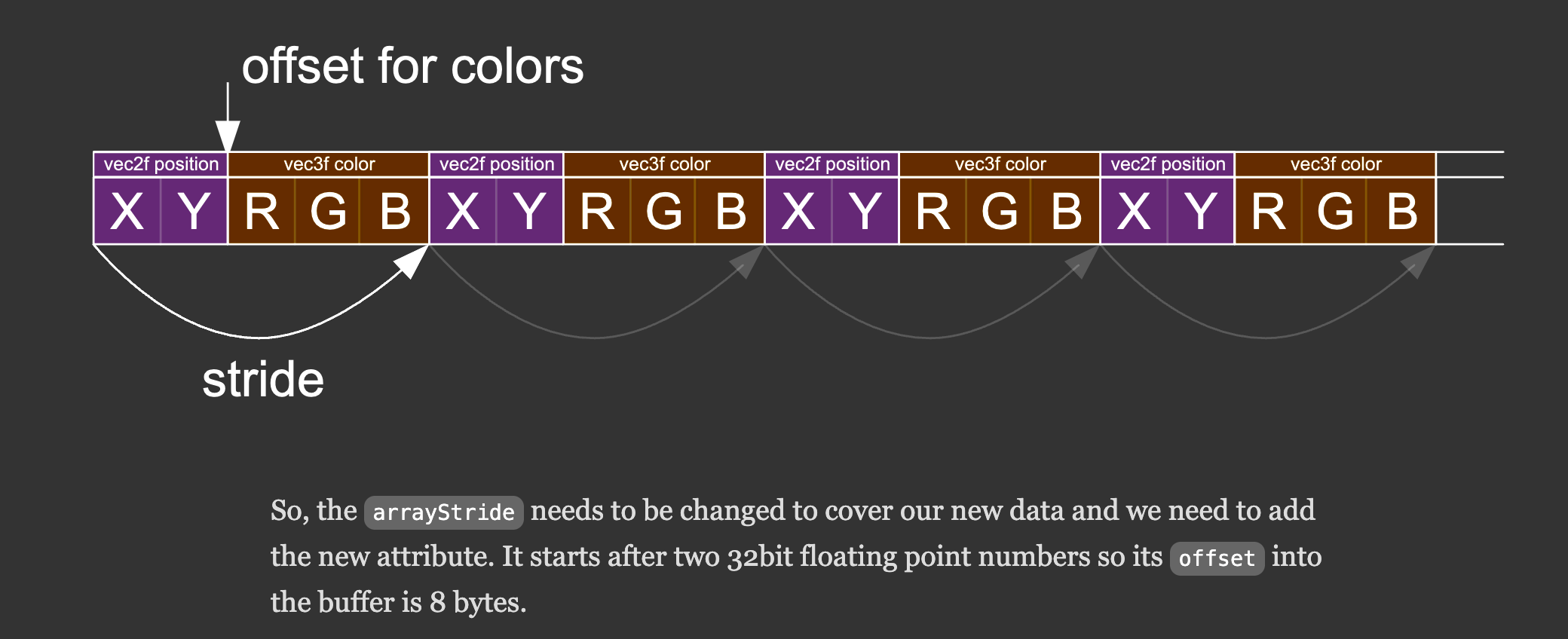

To the vertex entry of the pipeline descriptor we added a buffers array which is used to describe how to pull data out of 1 or more vertex buffers. For our first and only buffer, we set an arrayStride in number of bytes. A stride in this case is how many bytes to get from the data for one vertex in the buffer, to the next vertex in the buffer.

After several examples I also understood how to use the stride and the offsets when passing attributes to the shader:

    buffers: [
      {
        arrayStride: 5 * 4, // 5 floats (2 position + 3 color), 4 bytes each
        attributes: [
          { shaderLocation: 0, offset: 0, format: 'float32x2' }, // position
          { shaderLocation: 4, offset: 8, format: 'float32x3' }, // perVertexColor
        ],
      },
      ...
    ]
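To convince myself how the stride and offsets fit together, here is a minimal CPU-side sketch of the lookup the GPU does for each vertex. It assumes the same layout as above (2 position floats followed by 3 color floats); the `name` and `numComponents` fields and the `readAttribute` helper are made up for illustration and are not part of the WebGPU API.

```javascript
// Hypothetical layout mirroring the descriptor above:
// 2 floats of position followed by 3 floats of color per vertex.
const FLOAT_BYTES = 4;
const layout = {
  arrayStride: 5 * FLOAT_BYTES,
  attributes: [
    { name: 'position', offset: 0, numComponents: 2 },
    { name: 'perVertexColor', offset: 2 * FLOAT_BYTES, numComponents: 3 },
  ],
};

// Interleaved data for two vertices: x, y, r, g, b.
const vertexData = new Float32Array([
  0.0, 0.5, 1, 0, 0,
  0.5, -0.5, 0, 1, 0,
]);

// Emulates the vertex fetch on the CPU:
// start at vertexIndex * arrayStride, then add the attribute's offset.
function readAttribute(data, layout, vertexIndex, attributeName) {
  const attr = layout.attributes.find((a) => a.name === attributeName);
  const byteOffset = vertexIndex * layout.arrayStride + attr.offset;
  const firstFloat = byteOffset / FLOAT_BYTES;
  return Array.from(data.subarray(firstFloat, firstFloat + attr.numComponents));
}

console.log(readAttribute(vertexData, layout, 1, 'position'));
console.log(readAttribute(vertexData, layout, 1, 'perVertexColor'));
```

The second vertex starts 20 bytes into the buffer, and its color starts 8 bytes after that, which is exactly what `arrayStride` and `offset` describe.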

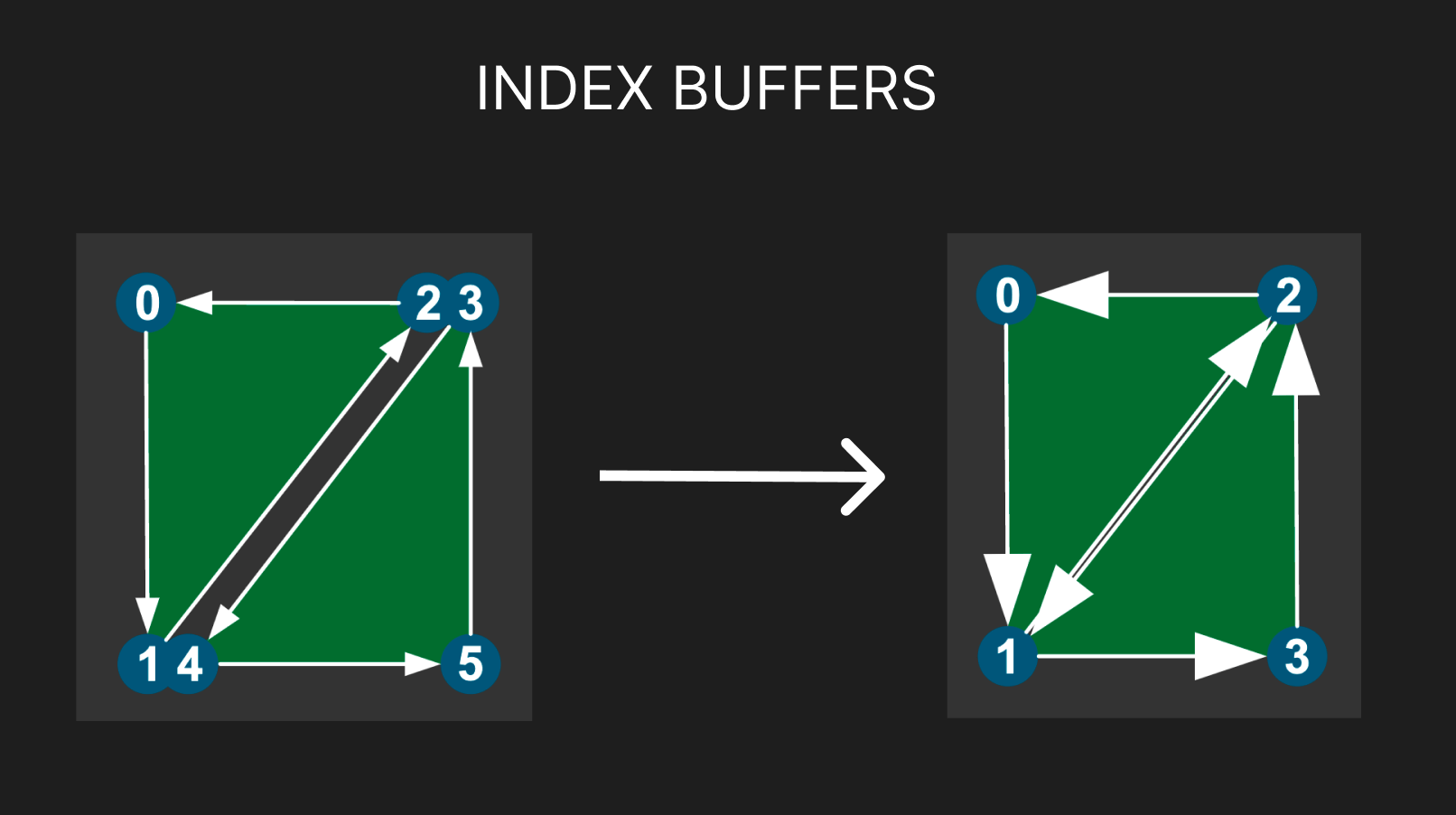

Index buffers

To avoid duplicating vertices, we can use index buffers to reuse existing ones.

The code then turns into this, creating both vertex data and index data:

    function createCircleVertices({
      radius = 1,
      numSubdivisions = 24,
      innerRadius = 0,
      startAngle = 0,
      endAngle = Math.PI * 2,
    } = {}) {
      // 2 vertices at each subdivision, + 1 to wrap around the circle.
      const numVertices = (numSubdivisions + 1) * 2;
      // const vertexData = new Float32Array(numSubdivisions * 2 * 3 * 2);
      const vertexData = new Float32Array(numVertices * (2 + 3));

      let offset = 0;
      const addVertex = (x, y, r, g, b) => {
        vertexData[offset++] = x;
        vertexData[offset++] = y;
        vertexData[offset++] = r;
        vertexData[offset++] = g;
        vertexData[offset++] = b;
      };

      const innerColor = [0.3, 0.3, 0.9];
      const outerColor = [0.9, 0.9, 0.9];

      // 2 triangles per subdivision
      //
      // 0  2  4  6  8 ...
      //
      // 1  3  5  7  9 ...
      for (let i = 0; i <= numSubdivisions; ++i) {
        const angle = startAngle + (i + 0) * (endAngle - startAngle) / numSubdivisions;

        const c1 = Math.cos(angle);
        const s1 = Math.sin(angle);

        addVertex(c1 * radius, s1 * radius, ...outerColor);
        addVertex(c1 * innerRadius, s1 * innerRadius, ...innerColor);
      }

      const indexData = new Uint32Array(numSubdivisions * 6);
      let ndx = 0;

      // 1st tri  2nd tri  3rd tri  4th tri
      // 0 1 2    2 1 3    2 3 4    4 3 5
      //
      // 0--2        2     2--4        4  .....
      // | /        /|     | /        /|
      // |/        / |     |/        / |
      // 1        1--3     3        3--5  .....
      for (let i = 0; i < numSubdivisions; ++i) {
        const ndxOffset = i * 2;

        // first triangle
        indexData[ndx++] = ndxOffset;
        indexData[ndx++] = ndxOffset + 1;
        indexData[ndx++] = ndxOffset + 2;

        // second triangle
        indexData[ndx++] = ndxOffset + 2;
        indexData[ndx++] = ndxOffset + 1;
        indexData[ndx++] = ndxOffset + 3;
      }

      return {
        vertexData,
        indexData,
        numVertices: indexData.length,
      };
    }

We then also set an index buffer to pass our index data, and we need to call a different draw function. Note that the numVertices returned above is really the number of indices to draw:

    pass.drawIndexed(numVertices, kNumObjects);

And we saved 33% vertices, slay!
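To see where a saving like this can come from, here is a minimal sketch for a single quad rather than the full circle. The data and the `expandIndexed` helper are my own illustration, not code from the lesson: four unique corners plus six indices describe the same two triangles that would otherwise need six full vertices.

```javascript
// Four unique corners of a quad, 2 floats (x, y) each.
const quadVertices = new Float32Array([
  0, 0, // 0: bottom left
  1, 0, // 1: bottom right
  0, 1, // 2: top left
  1, 1, // 3: top right
]);

// Two triangles that share vertices 1 and 2.
const quadIndices = new Uint32Array([0, 1, 2, 2, 1, 3]);

// Expand indexed geometry back into the flat vertex list
// that a non-indexed draw call would need.
function expandIndexed(vertices, indices, floatsPerVertex) {
  const out = new Float32Array(indices.length * floatsPerVertex);
  indices.forEach((index, i) => {
    const src = index * floatsPerVertex;
    out.set(vertices.subarray(src, src + floatsPerVertex), i * floatsPerVertex);
  });
  return out;
}

const expanded = expandIndexed(quadVertices, quadIndices, 2);
const uniqueCount = quadVertices.length / 2;
const expandedCount = expanded.length / 2;
const percentFewer = Math.round((1 - uniqueCount / expandedCount) * 100);

console.log(`indexed: ${uniqueCount} vertices, non-indexed: ${expandedCount} vertices`);
console.log(`fewer vertices: ${percentFewer}%`);
```

This only counts vertices. The index buffer itself also takes up memory, so the real saving in bytes depends on how large each vertex is.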

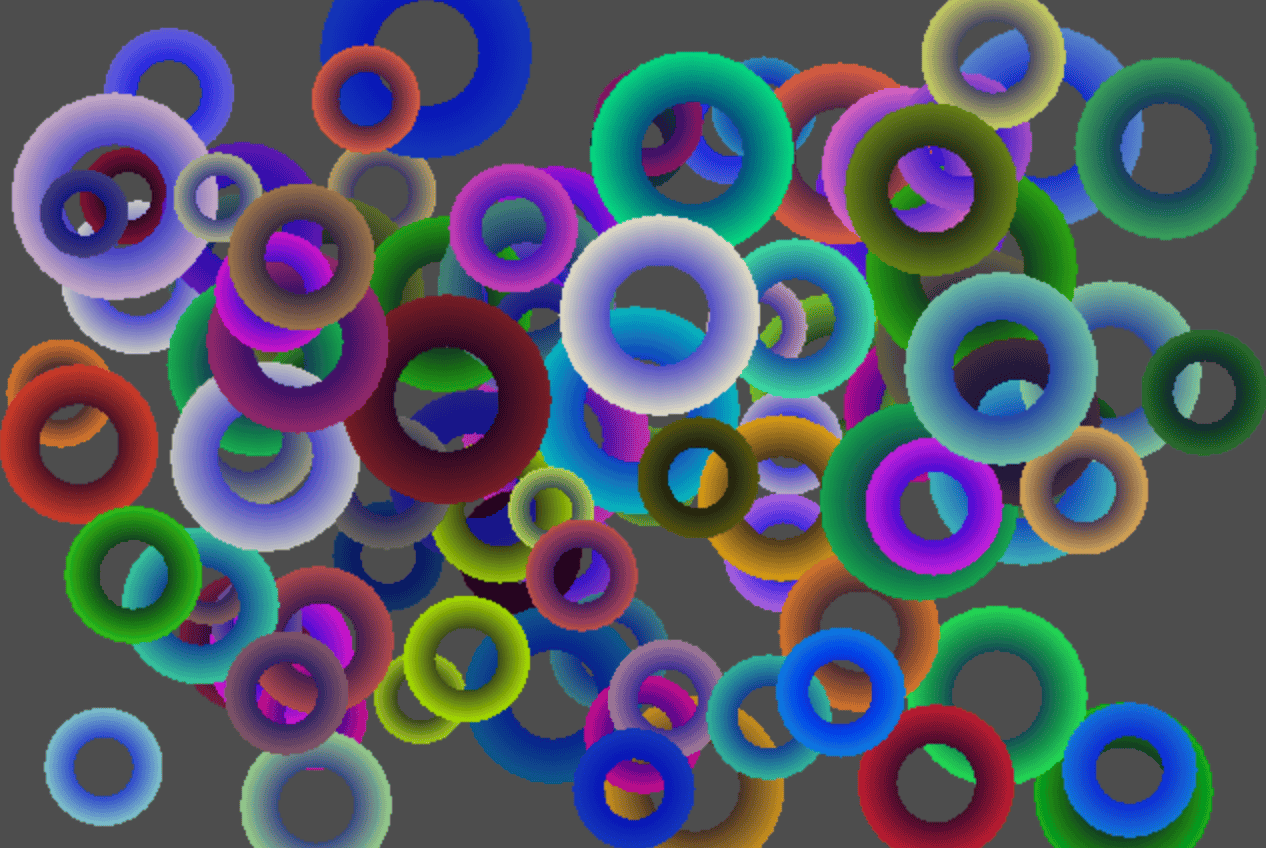

This is what we’re getting, by the way:

Now we’re ready to go to textures.

Textures

Okay, we’re finally at the last part of passing data into the WebGPU shaders!

The major difference with textures is that they are accessed through a sampler, which can read up to 16 different values from a texture and blend them together. As if there was not enough new information before, yay…

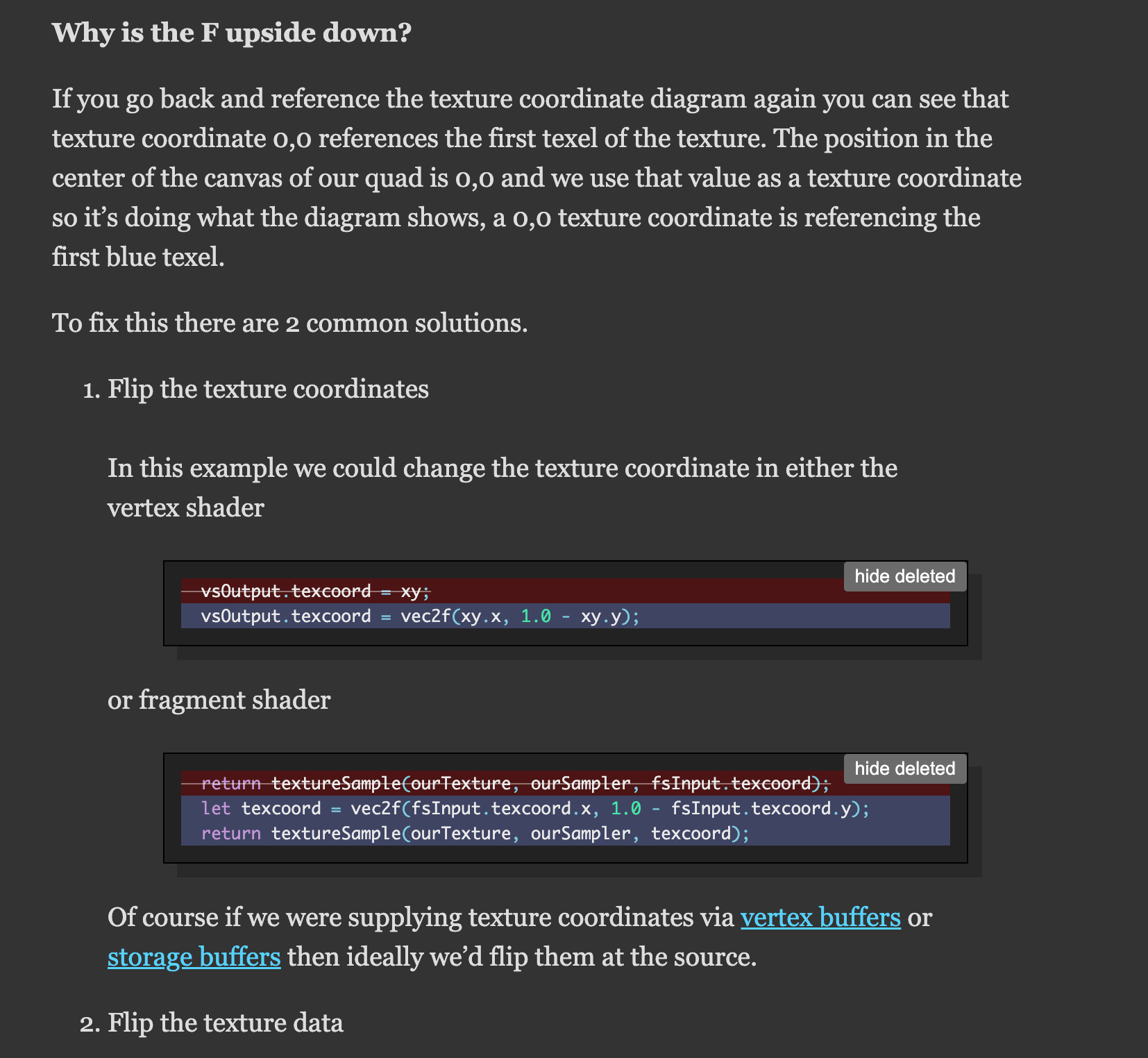

The first thing I noticed when I started following the lesson was flipped textures, which we ran into a lot during my Bachelor thesis, and here was the explanation for that:

It was also nice to finally see familiar things like the explanation of UVs and mixing. This is something I’ve encountered before, so it was easier to comprehend =)
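The explanation from the lesson is not reproduced here, so the following is only a sketch under my own assumption: the texel data is written with the top row of the picture first, while texture coordinate v = 0 ends up at the bottom of the quad, so the image appears upside down. One way around it is to reverse the row order before uploading; the `flipRows` helper and the 2x2 texture below are hypothetical.

```javascript
// Flip a tightly packed pixel array vertically by reversing the order of its rows.
function flipRows(pixels, width, height, bytesPerPixel = 4) {
  const rowBytes = width * bytesPerPixel;
  const flipped = new Uint8Array(pixels.length);
  for (let y = 0; y < height; ++y) {
    const srcStart = y * rowBytes;
    const dstStart = (height - 1 - y) * rowBytes;
    flipped.set(pixels.subarray(srcStart, srcStart + rowBytes), dstStart);
  }
  return flipped;
}

// Hypothetical 2x2 RGBA texture: red, green on the first row; blue, white on the second.
const texels = new Uint8Array([
  255, 0, 0, 255,     0, 255, 0, 255,
  0, 0, 255, 255,     255, 255, 255, 255,
]);

const flippedTexels = flipRows(texels, 2, 2);
console.log(Array.from(flippedTexels.subarray(0, 4))); // first texel is now blue
```

The other option is to leave the data alone and flip the v coordinate in the shader instead.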

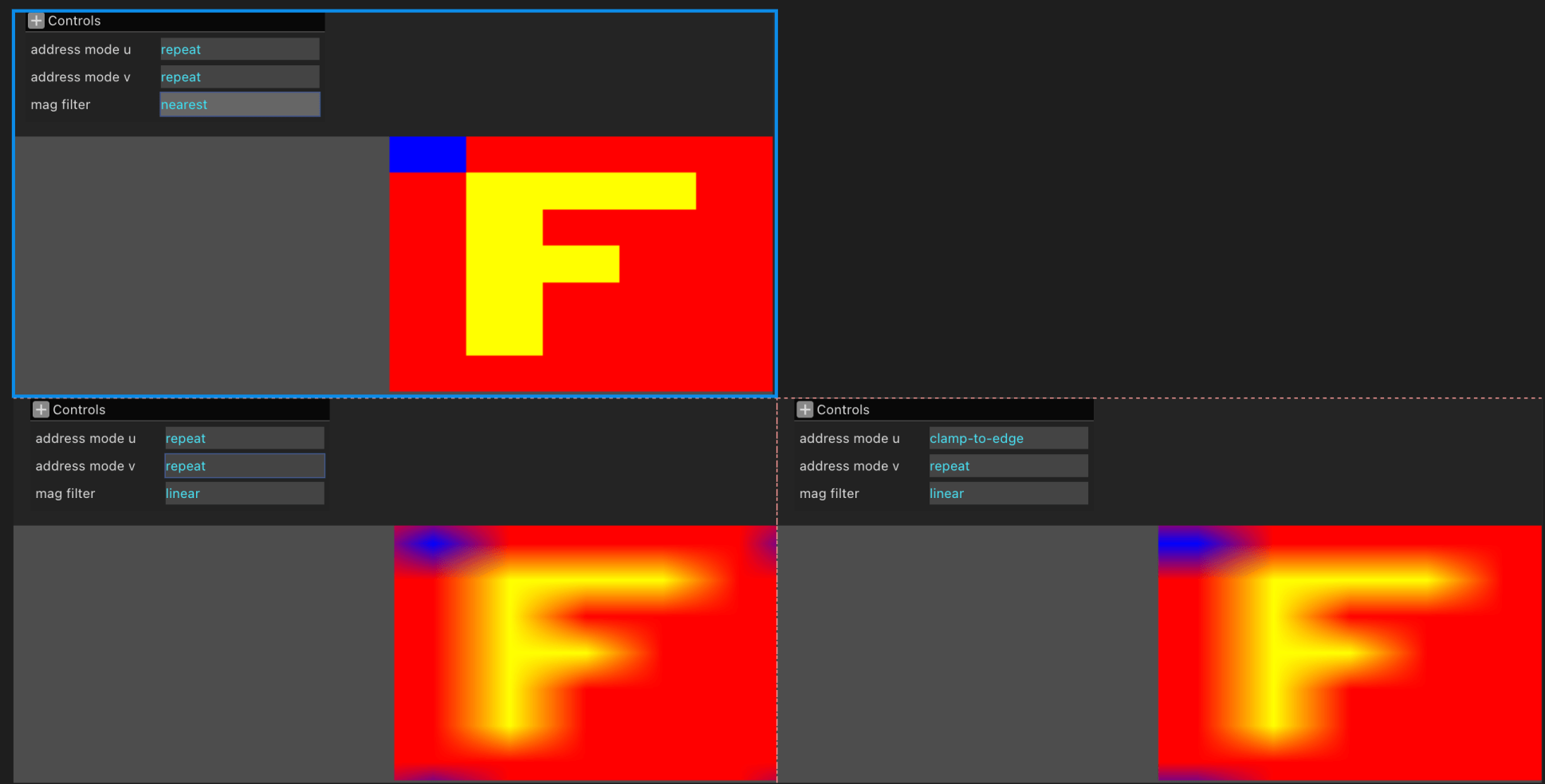
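The mixing part can be sketched in plain JavaScript. This is my own simplified version working on single numbers instead of colors: `mix` follows the same idea as the WGSL built-in of that name, and `bilinear` is a hypothetical helper that blends four neighbouring texel values the way linear filtering is usually described.

```javascript
// Linear interpolation, the same idea as WGSL's mix(a, b, t).
const mix = (a, b, t) => a + (b - a) * t;

// Blend four neighbouring texel values: first horizontally, then vertically.
function bilinear(topLeft, topRight, bottomLeft, bottomRight, tx, ty) {
  const top = mix(topLeft, topRight, tx);
  const bottom = mix(bottomLeft, bottomRight, tx);
  return mix(top, bottom, ty);
}

console.log(bilinear(0, 1, 0, 1, 0.25, 0.5)); // 0.25
console.log(bilinear(0, 0, 1, 1, 0.5, 0.5)); // 0.5
```

Here `tx` and `ty` say how far the sample point sits between the texel centers, from 0 to 1.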

I think I finally understood the meaning of magFilter and of clamping textures to the edge versus repeating them, which I had only seen very briefly in three.js before:

It’s nice knowing that even if I am not writing low-level shaders daily, I now understand better how graphics APIs work in general!
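As a rough mental model, here is a small sketch that emulates the 'clamp-to-edge' and 'repeat' address modes along one axis. It picks a single texel, which is roughly what magFilter: 'nearest' does, whereas 'linear' would blend neighbouring texels. The `texelIndex` function and the four-texel row are made up for illustration and simplify what real hardware does.

```javascript
// Map a normalized coordinate onto a texel index along one axis,
// emulating two sampler address modes with nearest filtering.
function texelIndex(u, size, addressMode) {
  let wrapped;
  if (addressMode === 'repeat') {
    wrapped = u - Math.floor(u); // keep only the fractional part
  } else {
    wrapped = Math.min(Math.max(u, 0), 1); // 'clamp-to-edge'
  }
  // Clamp so that a coordinate of exactly 1 still lands on the last texel.
  return Math.min(Math.floor(wrapped * size), size - 1);
}

const row = ['A', 'B', 'C', 'D']; // hypothetical 4-texel row

console.log(row[texelIndex(1.3, 4, 'clamp-to-edge')]); // 'D'
console.log(row[texelIndex(1.3, 4, 'repeat')]); // 'B'
console.log(row[texelIndex(-0.2, 4, 'clamp-to-edge')]); // 'A'
console.log(row[texelIndex(-0.2, 4, 'repeat')]); // 'D'
```

With clamping, everything outside the 0 to 1 range sticks to the edge texel; with repeating, the texture tiles.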